[SSDA][CLS] MARRS: Modern Backbones Assisted Co-training for Rapid and Robust Semi-Supervised Domain Adaptation

- Paper: https://openaccess.thecvf.com/content/CVPR2023W/ECV/papers/Jain_MARRS_Modern_Backbones_Assisted_Co-Training_for_Rapid_and_Robust_Semi-Supervised_CVPRW_2023_paper.pdf

- Accepted at CVPRW 2023 (citations: 0 as of 2023-10-30)

- Downstream task: SSDA for classification

Motivation

- The baseline backbone commonly used for SSDA classification (ResNet-34) is outdated.

- A modern backbone (ConvNeXt-L) trained with only simple SSL techniques already outperforms the baseline models.

Contribution

-

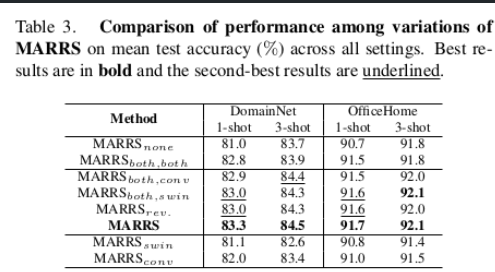

SSDA task에 최신 CNN, Vistion-Transformer 모델을 활용한 Co-training 기반 backbone으로 교체 적용

-

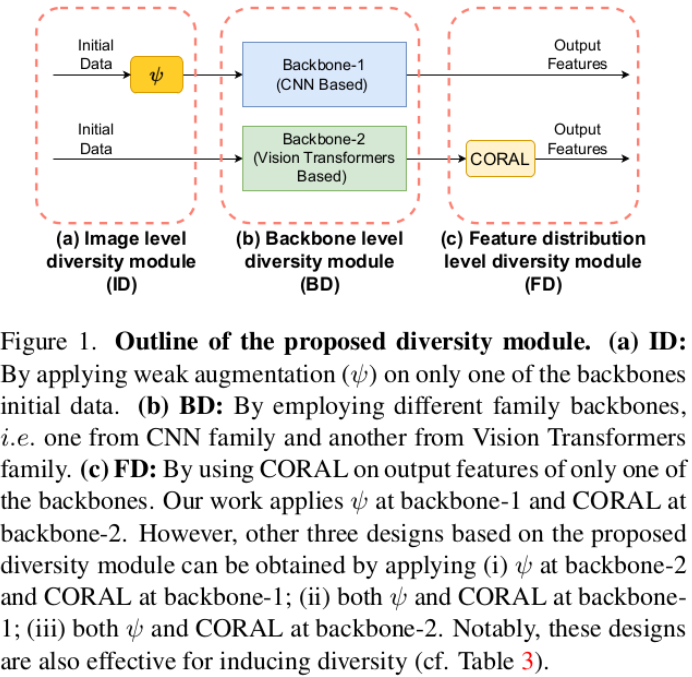

Diverse feature 학습을 위해 3가지 모듈 적용 (image-level, feature-level, backbone-level)

-

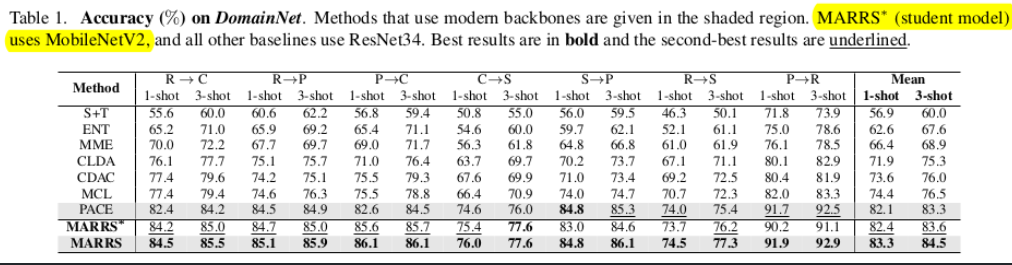

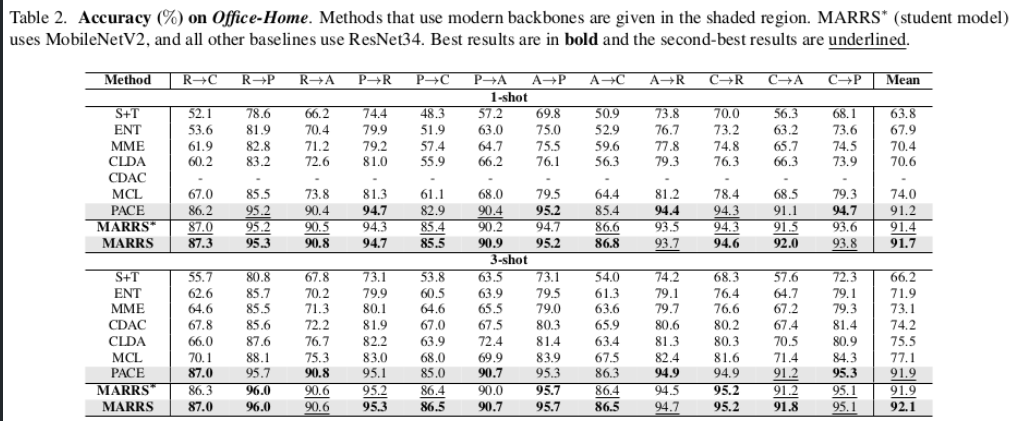

SSDA Datazset SOTA 달성

- Also achieves SOTA on SSDA datasets with a MobileNetV2 model trained via knowledge distillation (KD).

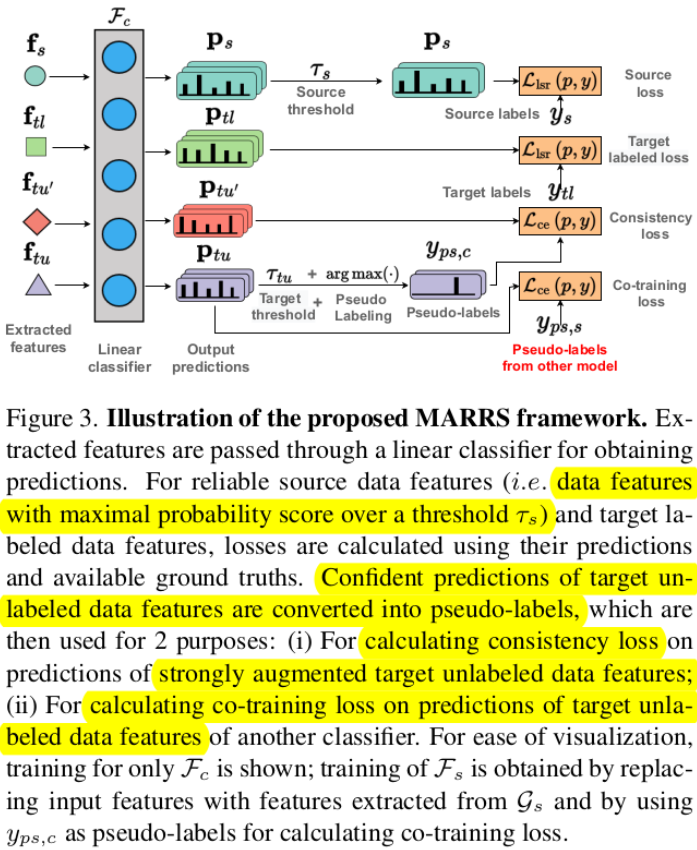

Overview

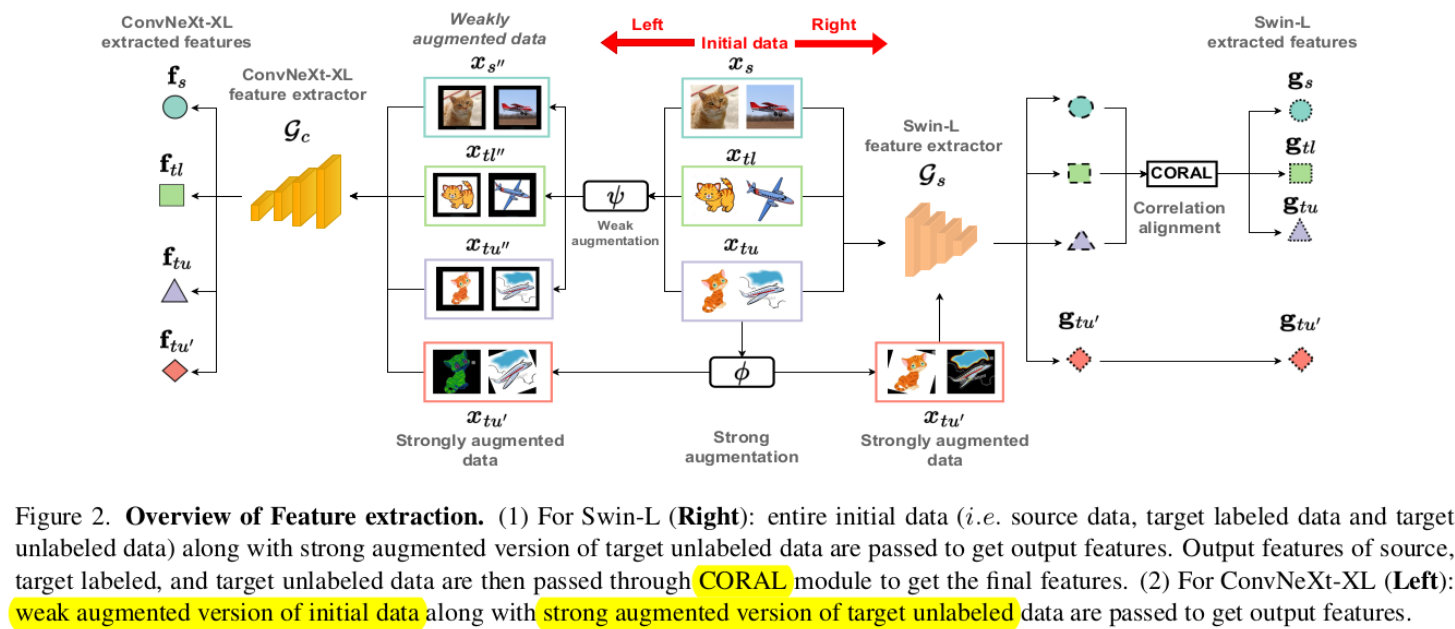

Feature Extraction

- Backbone-level diversity module (BD)
  - CNN-based: ConvNeXt-XL
  - Vision Transformer-based: Swin-L

- Image-level diversity module (ID)
  - During training, weak augmentation (ψ) is applied to the inputs of only one of the two models

- Feature-distribution-level diversity module (FD)
  - CORAL is attached to the output of only one of the two models
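To make the FD module concrete, here is a minimal sketch of a CORAL loss. It assumes the standard Deep CORAL formulation (squared Frobenius distance between the feature covariance matrices of two batches, scaled by 1 / (4d²)) and assumes it compares a source batch with a target batch from the same branch. The exact placement and loss weight used in MARRS are not reproduced here, and `coral_loss` is a hypothetical helper name.

```python
import numpy as np


def coral_loss(source_feats: np.ndarray, target_feats: np.ndarray) -> float:
    """Deep-CORAL-style loss between two feature batches of shape (n, d).

    Assumption: standard Deep CORAL, ||C_s - C_t||_F^2 / (4 d^2).
    """
    d = source_feats.shape[1]
    # rowvar=False: each column is one feature dimension, each row one sample.
    cov_s = np.cov(source_feats, rowvar=False)
    cov_t = np.cov(target_feats, rowvar=False)
    return float(np.sum((cov_s - cov_t) ** 2) / (4 * d * d))


rng = np.random.default_rng(0)
src = rng.normal(size=(64, 8))             # hypothetical source features
tgt = rng.normal(scale=2.0, size=(64, 8))  # hypothetical target features, wider spread
print(coral_loss(src, src))                # identical statistics give zero loss
print(coral_loss(src, tgt))                # mismatched second-order statistics give a positive loss
```

Because CORAL only matches second-order statistics, attaching it to one branch alone pushes that branch's feature distribution away from the other branch's, which is the kind of diversity the FD module is after.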

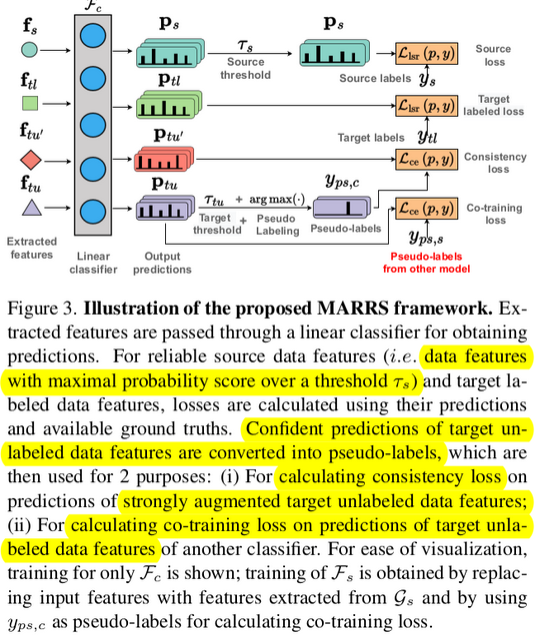

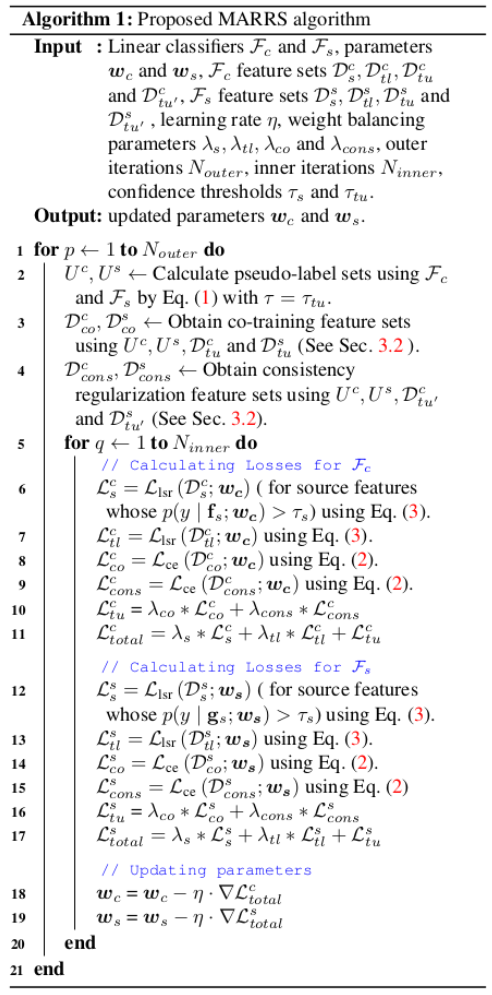

Classifier Training

- The confident predictions of each backbone are used as pseudo-ground-truth to train the other branch (co-training).
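The pseudo-label exchange above can be sketched as follows. This is an illustration under stated assumptions, not the paper's code: "confident" is taken to mean a max softmax probability above a threshold `tau` (0.9 here is a hypothetical value), and `cross_pseudo_labels` / `masked_ce` are hypothetical helper names.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    z = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def cross_pseudo_labels(logits_a, logits_b, tau=0.9):
    """Each branch's confident predictions become pseudo-labels for the other.

    Returns (labels_for_a, mask_for_a, labels_for_b, mask_for_b).
    """
    probs_a, probs_b = softmax(logits_a), softmax(logits_b)
    # Branch B teaches branch A wherever B is confident, and vice versa.
    labels_for_a, mask_for_a = probs_b.argmax(axis=1), probs_b.max(axis=1) >= tau
    labels_for_b, mask_for_b = probs_a.argmax(axis=1), probs_a.max(axis=1) >= tau
    return labels_for_a, mask_for_a, labels_for_b, mask_for_b


def masked_ce(logits, labels, mask) -> float:
    """Mean cross-entropy over the confident samples only (0 if none qualify)."""
    if not mask.any():
        return 0.0
    log_probs = np.log(softmax(logits[mask]))
    return float(-log_probs[np.arange(mask.sum()), labels[mask]].mean())


# Two unlabeled target samples, three classes (toy logits).
logits_a = np.array([[5.0, 0.0, 0.0], [0.2, 0.1, 0.0]])  # A is sure only about sample 0
logits_b = np.array([[0.0, 0.1, 0.2], [0.0, 6.0, 0.0]])  # B is sure only about sample 1
la, ma, lb, mb = cross_pseudo_labels(logits_a, logits_b)
loss_a = masked_ce(logits_a, la, ma)  # A learns class 1 on sample 1 from B
loss_b = masked_ce(logits_b, lb, mb)  # B learns class 0 on sample 0 from A
```

The point of exchanging labels rather than self-training is that the two backbones make different mistakes, so each branch mostly receives labels on samples where the other branch is reliable.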

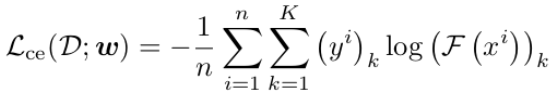

Loss

- Supervised loss: cross-entropy (CE) on the labeled source set + the labeled target set
  - n: number of samples in dataset D
  - K: number of classes
  - F: output of classifier F
  - Label smoothing is used to prevent over-confidence on the source domain
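A minimal sketch of a label-smoothed CE loss over n samples and K classes, using the symbols defined above. It assumes the common formulation where the target puts (1 − ε) on the true class plus ε / K on every class. The smoothing value ε = 0.1 and the name `label_smoothing_ce` are assumptions, not values taken from the paper.

```python
import numpy as np


def label_smoothing_ce(logits: np.ndarray, labels: np.ndarray, eps: float = 0.1) -> float:
    """Mean cross-entropy of classifier outputs F (shape n x K) against
    smoothed targets: (1 - eps) on the true class + eps / K on all classes."""
    n, k = logits.shape
    z = logits - logits.max(axis=1, keepdims=True)
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))  # stable log-softmax
    targets = np.full((n, k), eps / k)
    targets[np.arange(n), labels] += 1.0 - eps
    return float(-(targets * log_probs).sum(axis=1).mean())


# A very confident, correct prediction is cheap under plain CE (eps=0)
# but is penalised once smoothing is on, which discourages over-confidence.
confident = np.array([[10.0, 0.0, 0.0]])
plain = label_smoothing_ce(confident, np.array([0]), eps=0.0)
smoothed = label_smoothing_ce(confident, np.array([0]), eps=0.1)
```

For reference, PyTorch's `torch.nn.CrossEntropyLoss` exposes the same idea through its `label_smoothing` argument.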

- Unsupervised loss
  - Unlabeled target set (CE loss): co-training
  - Consistency regularization loss: forces the features of the strongly augmented image to match those of the original image
    - Strong augmentation: RandAugment
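The consistency term can be sketched as a distance between the two views' features. These notes do not state which distance MARRS uses, so the sketch assumes mean squared error between L2-normalized feature vectors, and `consistency_loss` is a hypothetical name. The strong view would come from an augmentation such as torchvision's `transforms.RandAugment`. Here the features are just random arrays.

```python
import numpy as np


def l2_normalize(x: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)


def consistency_loss(feats_orig: np.ndarray, feats_strong: np.ndarray) -> float:
    """Mean squared distance between L2-normalised features of the original
    view and the strongly augmented view of the same images (assumed metric)."""
    a = l2_normalize(feats_orig)
    b = l2_normalize(feats_strong)
    return float(((a - b) ** 2).sum(axis=1).mean())


rng = np.random.default_rng(1)
f = rng.normal(size=(16, 32))  # hypothetical features of the original images
same = consistency_loss(f, f)            # perfectly consistent views
scaled = consistency_loss(f, 3.0 * f)    # same direction, different magnitude
opposite = consistency_loss(f, -f)       # maximally inconsistent views
```

Normalizing first makes the loss depend only on feature direction, so the scaled view costs essentially nothing while the opposite view hits the maximum squared distance of 4. In a training framework the original-view features would typically be treated as a fixed target, which this NumPy sketch does not model.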

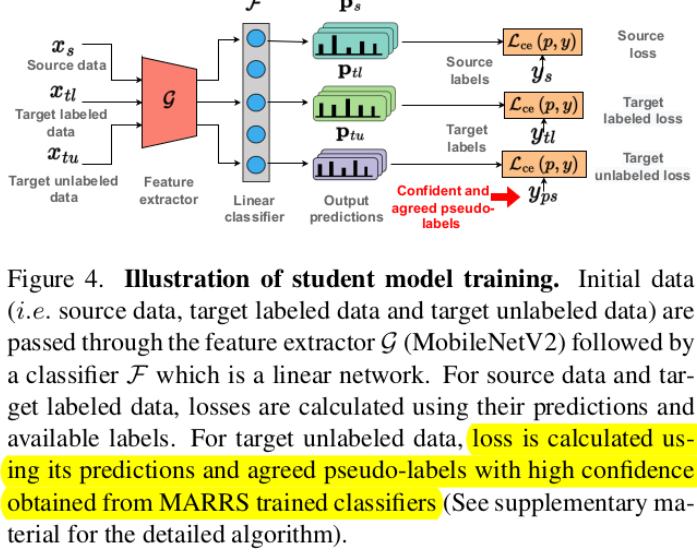

KD

- Applied to speed up inference

- Teacher: Swin-L + ConvNeXt-XL (MARRS)

- Student: MobileNetV2
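As a sketch of the distillation step, the snippet below uses the standard soft-target KD loss (KL divergence at temperature T, scaled by T²). Two parts are assumptions, not details from the paper: the two-backbone teacher is combined by averaging the softened outputs of Swin-L and ConvNeXt-XL, and T = 4 is used. `kd_loss` is a hypothetical name.

```python
import numpy as np


def log_softmax(logits: np.ndarray, T: float = 1.0) -> np.ndarray:
    z = logits / T
    z = z - z.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))


def kd_loss(student_logits, teacher_logits_list, T: float = 4.0) -> float:
    """Soft-target KD: mean KL(teacher || student) at temperature T, times T^2.

    Assumption: the teacher distribution is the average of the softened
    outputs of all teacher backbones (e.g. Swin-L and ConvNeXt-XL).
    """
    p_teacher = np.mean([np.exp(log_softmax(t, T)) for t in teacher_logits_list], axis=0)
    log_p_teacher = np.log(np.clip(p_teacher, 1e-12, None))  # avoid log(0)
    kl = (p_teacher * (log_p_teacher - log_softmax(student_logits, T))).sum(axis=1)
    return float(kl.mean() * T * T)


rng = np.random.default_rng(2)
swin_logits = rng.normal(size=(8, 5))  # hypothetical teacher 1 outputs
convnext_logits = rng.normal(size=(8, 5))  # hypothetical teacher 2 outputs
student_logits = rng.normal(size=(8, 5))   # hypothetical MobileNetV2 outputs
matched = kd_loss(swin_logits, [swin_logits])  # student equals a single teacher
loss = kd_loss(student_logits, [swin_logits, convnext_logits])
```

The T² factor keeps gradient magnitudes roughly comparable across temperatures when this term is mixed with a regular CE loss on labeled data.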

Algorithm

Experiment

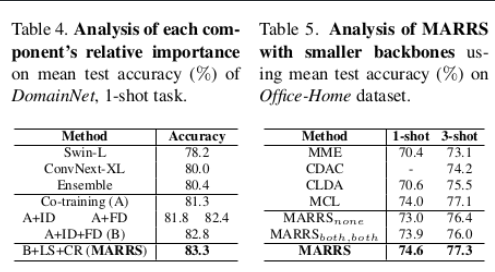

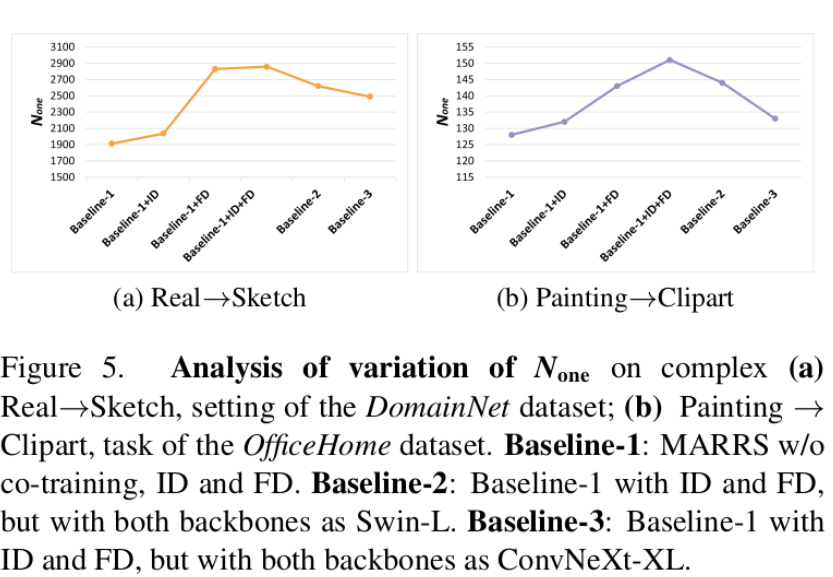

Ablation Studies

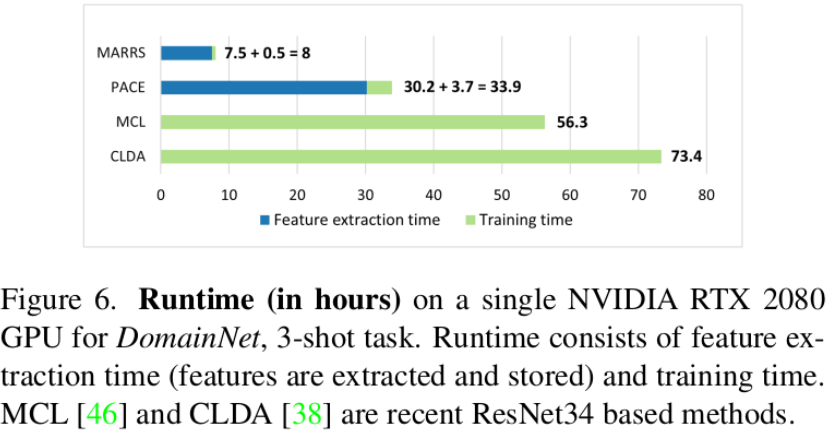

Inference Time